Photo by B. Schilling

I have since left the university and now work on Sensor Intelligence.

Previously, I was a PhD student and postdoctoral research assistant at the Humanoid Robots Laboratory of the University of Freiburg, where I received my PhD under the supervision of Maren Bennewitz in March 2014.

See Projects and Publications below for more details about my research. Some videos of my work are also available on our lab's YouTube channel.

You can find some of the code that I develop and maintain on the Code page.

Projects

The OctoMap library provides a compact and efficient data structure for robotic mapping in arbitrary 3D environments. I am the co-developer and current maintainer of the project, which is available as open source on GitHub under the BSD license.

As a member of the DFG-funded SFB/TR8 Spatial Cognition, I work on humanoid robot navigation in complex indoor environments.

I am the developer and maintainer of several drivers and other software that integrate the Nao humanoid robot into ROS, a free and open-source robot operating system. In addition to the Nao ROS stack, I also maintain the humanoid_navigation stack and the octomap_mapping stack that integrates OctoMap into ROS.

During a research internship at Willow Garage in California, I worked on the manipulation occupancy map collider and developed the 3d_navigation stack. This set of software packages enables a mobile manipulation robot like the PR2 to navigate in complex, three-dimensional, and cluttered environments.

Publications

You can also find my publications on Google Scholar and DBLP.

2014

-

Armin Hornung, Sebastian Böttcher, Christian Dornhege, Andreas Hertle, Jonas Schlagenhauf, and Maren Bennewitz

Mobile Manipulation in Cluttered Environments with Humanoids: Integrated Perception, Task Planning, and Action Execution

In:

Proceedings of the IEEE-RAS International Conference on Humanoid Robots, 2014.

To autonomously carry out complex mobile manipulation

tasks, a robot control system has to integrate several components for

perception, world modeling, action planning and replanning,

navigation, and manipulation. In this paper, we present a modular

framework that is based on the Temporal Fast Downward Planner and

supports external modules to control the robot. This allows us to

tightly integrate individual sub-systems with the high-level symbolic

planner and enables a humanoid robot to solve challenging mobile

manipulation tasks. In the work presented here, we address mobile

manipulation with humanoids in cluttered environments, particularly

the task of collecting objects and delivering them to designated

places in a home-like environment while clearing obstacles out of the

way. We implemented our system for a Nao humanoid tidying up a

room, i.e., the robot has to collect items

scattered on the floor, move obstacles out of its way, and deliver the

objects to designated target locations. Despite the limited sensing

and motion capabilities of the low-cost platform, the experiments show

that our approach results in reliable task execution by applying

monitoring actions to verify object and robot states.

@INPROCEEDINGS{hornung14humanoids,

author = {Armin Hornung and Sebastian Boettcher and Christian Dornhege

and Andreas Hertle and Jonas Schlagenhauf and Maren Bennewitz},

title = {Mobile Manipulation in Cluttered Environments with Humanoids:

Integrated Perception, Task Planning, and Action Execution},

booktitle = {Proc.~of the IEEE-RAS International Conference on Humanoid

Robots (HUMANOIDS)},

year = 2014,

month = {November},

address = {Madrid, Spain},

}

-

Humanoid service robots promise a high adaptability to environments designed

for humans due to their human-like body layout. They are thus well-suited

to assist humans in domestic environments for everyday tasks or

providing elderly care, but also to replace human workers in hazardous environments.

The human-like structure allows versatile manipulation with two arms

as well as stepping over or onto obstacles with bipedal locomotion.

Compared to wheeled platforms, however, the kinematic structure of humanoids

requires active balancing while walking and allows only a limited payload, e.g.,

for additional sensors. Inaccurate motion execution can lead to foot slippage

and thus to a poor estimation of ego-motion. Furthermore, the high number of

degrees of freedom makes planning and control a challenging problem for humanoid robots.

Autonomous navigation is a core capability of any service robot that performs

high-level tasks involving mobility. Before a robot can fulfill a task, it needs to know where it is

in the environment, what the environment looks like, and how it can successfully reach its goal.

In this thesis, we present several novel contributions to the field of

humanoid robotics with a focus on navigation in complex environments. We

address challenges in the areas of 3D environment representations, localization,

perception, motion planning, and mobile manipulation.

As a basis, we first introduce a memory-efficient 3D environment representation

along with techniques for building three-dimensional maps. In this representation, our

probabilistic localization approach estimates the 6D pose of the humanoid based on

data of its noisy onboard sensors. We compare different range sensors as well

as sensor and motion models. For the critical task of climbing stairs,

we develop an improved particle filter that additionally integrates vision data for

a highly accurate pose estimate. We also present methods to perceive staircases in

3D range data. For reaching a navigation goal with a humanoid robot,

we introduce and compare different search-based footstep planning approaches

with anytime capabilities. We then investigate planning for the whole body of the humanoid

while considering constraints such as maintaining balance during a manipulation task.

This enables the robot to pick up objects or open doors. Finally,

we present an efficient approach for navigation in three-dimensional

cluttered environments that is particularly suited for mobile manipulation.

All techniques developed in this thesis were thoroughly evaluated both with

real robots and in simulation. Our contributions generally advance

the navigation capabilities of humanoid robots in complex

indoor environments.

@PHDTHESIS{hornung14phd,

author = {Armin Hornung},

title = {Humanoid Robot Navigation in Complex Indoor Environments},

school = {Albert-Ludwigs-Universit{\"a}t Freiburg},

year = {2014},

address = {Freiburg, Germany},

month = {March}

}

-

Accurate and reliable localization is a prerequisite for autonomously

performing high-level tasks with humanoid robots.

In this article, we present a probabilistic localization method for humanoid robots

navigating in arbitrarily complex indoor environments using only onboard

sensing, which is a challenging task. Inaccurate motion execution of biped robots leads to an

uncertain estimate of odometry, and their limited payload constrains

perception to observations from lightweight and typically noisy sensors. Additionally, humanoids

do not walk on flat ground only and perform a swaying motion

while walking, which requires estimating a full 6D torso pose.

We apply Monte Carlo localization to globally

determine and track a humanoid's 6D pose in a given 3D world model, which

may contain multiple levels and staircases. We present an

observation model to integrate range measurements from a laser scanner or a depth camera as well as attitude data and

information from the joint encoders. To increase the localization

accuracy, e.g., while climbing stairs, we propose a further

observation model and additionally use monocular vision data

in an improved proposal distribution.

We demonstrate the effectiveness of our methods in extensive

real-world experiments with a Nao humanoid. As the experiments illustrate, the robot is able to

globally localize itself and accurately track its 6D pose while walking and climbing stairs.

@ARTICLE{hornung14ijhr,

author = {Armin Hornung and Stefan Osswald and Daniel Maier and Maren Bennewitz},

title = {Monte Carlo Localization for Humanoid Robot Navigation in

Complex Indoor Environments},

journal = {International Journal of Humanoid Robotics},

volume = {11},

number = {02},

year = {2014},

month= {June},

doi = {10.1142/S0219843614410023},

}
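The predict, weight, and resample cycle behind Monte Carlo localization can be illustrated with a deliberately reduced sketch. It is not the method from the article: the state is a single coordinate along a corridor instead of a 6D torso pose, the only sensor is a hypothetical range reading to a wall at the origin, and all noise parameters and function names (`mcl_step`, `localize`) are assumptions chosen for illustration.

```python
import math
import random


def mcl_step(particles, control, measurement, rng,
             motion_sigma=0.05, sensor_sigma=0.2):
    """One predict-weight-resample cycle of a 1D particle filter."""
    # Prediction: apply the odometry reading plus sampled motion noise.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # Correction: weight each particle by a Gaussian measurement likelihood.
    # The measured range to the wall at 0 equals the position itself here.
    weights = [math.exp(-0.5 * ((measurement - p) / sensor_sigma) ** 2)
               for p in moved]
    if sum(weights) == 0.0:
        # All likelihoods underflowed; fall back to uniform weights.
        weights = [1.0] * len(moved)
    # Resampling: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def localize(true_start=2.0, step=0.5, n_steps=5, n_particles=500, seed=0):
    """Simulate global localization; returns (true position, estimate)."""
    rng = random.Random(seed)
    # Global localization: start with particles spread over the whole corridor.
    particles = [rng.uniform(0.0, 10.0) for _ in range(n_particles)]
    true_x = true_start
    for _ in range(n_steps):
        true_x += step
        z = true_x + rng.gauss(0.0, 0.1)  # simulated noisy range reading
        particles = mcl_step(particles, step, z, rng)
    return true_x, sum(particles) / len(particles)


if __name__ == "__main__":
    truth, estimate = localize()
    print(f"true position {truth:.2f}, estimated {estimate:.2f}")
```

Starting from a uniform particle set corresponds to global localization; once the particles have converged, the same loop performs pose tracking.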

2013

-

Efficient footstep planning for humanoid navigation through cluttered

environments is still a challenging problem. Often, obstacles create local

minima in the search space, forcing heuristic planners such as A* to expand

large areas. Furthermore, planning longer footstep paths often takes a long time

to compute. In this work, we introduce and discuss several solutions to these

problems. For navigation, finding the optimal path initially is often not needed

as it can be improved while walking. Thus, anytime search-based planning based

on the anytime repairing A* or randomized A* search provides promising

functionality. It allows us to obtain efficient paths with provable suboptimality

within short planning times. In contrast to completely randomized methods, anytime

search-based planners generate paths that are goal-directed and guaranteed to be

no more than a certain factor longer than the optimal solution. By adding new

stepping capabilities and accounting for the whole body of the robot in the

collision check, we extend the footstep planning approach to 3D. This enables a

humanoid to step over clutter and climb onto obstacles. We thoroughly evaluated

the performance of search-based planning in cluttered environments and for

longer paths. We furthermore provide solutions to efficiently plan long

trajectories using an adaptive level-of-detail planning approach.

@INPROCEEDINGS{hornung13icraws,

author = {Armin Hornung and Daniel Maier and Maren Bennewitz},

title = {Search-Based Footstep Planning},

booktitle = {Proc.~of the ICRA Workshop on Progress and Open Problems in

Motion Planning and Navigation for Humanoids},

year = 2013,

month = {May},

address = {Karlsruhe, Germany}

}

-

Humanoid service robots performing complex object manipulation tasks

need to plan whole-body motions that satisfy a variety of

constraints: The robot must keep its balance, self-collisions and

collisions with obstacles in the environment must be avoided and, if

applicable, the trajectory of the end-effector must follow the

constrained motion of a manipulated object in Cartesian space. These

constraints and the high number of degrees of freedom make

whole-body motion planning for humanoids a challenging problem. In

this paper, we present an approach to whole-body motion planning

with a focus on the manipulation of articulated objects such as doors

and drawers. Our approach is based on rapidly-exploring random

trees in combination with inverse kinematics and considers all

required constraints during the search. Models of articulated

objects generate hand poses for sampled configurations along

the trajectory of the object handle. We thoroughly evaluated

our planning system and present experiments with a Nao humanoid

opening a drawer, a door, and picking up an object.

The experiments demonstrate the ability

of our framework to generate solutions to complex planning

problems and furthermore show that these plans can be reliably

executed even on a low-cost humanoid platform.

@INPROCEEDINGS{burget13icra,

author = {Felix Burget and Armin Hornung and Maren Bennewitz},

title = {Whole-Body Motion Planning for Manipulation of Articulated Objects},

booktitle = {Proc.~of the IEEE International Conference on Robotics and

Automation (ICRA)},

year = 2013,

month = {May},

address = {Karlsruhe, Germany}

}

-

Three-dimensional models provide a volumetric representation of space

which is important for a variety of robotic applications including flying robots and robots that

are equipped with manipulators. In this paper, we present an

open-source framework to generate volumetric 3D environment models. Our mapping approach

is based on octrees and uses probabilistic occupancy estimation. It

explicitly represents not only occupied space, but also free and unknown areas.

Furthermore, we propose an octree map compression method that keeps the 3D models

compact. Our framework is available as an open-source C++ library and

has already been successfully applied in several robotics projects. We

present a series of experimental results carried out with real robots

and on publicly available real-world

datasets. The results demonstrate that our approach is able to update

the representation efficiently and models

the data consistently while keeping the memory requirement at a

minimum.

@ARTICLE{hornung13auro,

author = {Armin Hornung and Kai M. Wurm and Maren Bennewitz and Cyrill

Stachniss and Wolfram Burgard},

title = {{OctoMap}: An Efficient Probabilistic {3D} Mapping Framework Based

on Octrees},

journal = {Autonomous Robots},

year = 2013,

month= {April},

url = {http://octomap.github.com},

doi = {10.1007/s10514-012-9321-0},

note = {Software available at \url{http://octomap.github.com}},

volume = 34,

issue = 3,

pages = {189--206}

}
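The probabilistic occupancy estimation described in the abstract above can be sketched as a clamped log-odds update per voxel. This is only an illustration and not the OctoMap API: a flat dictionary stands in for the octree, the class and function names are hypothetical, and the sensor-model and clamping values are assumptions.

```python
import math

# Illustrative sensor-model and clamping parameters (assumptions).
P_HIT, P_MISS = 0.7, 0.4
CLAMP_MIN, CLAMP_MAX = 0.12, 0.97


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def probability(log_odds):
    """Convert log-odds back to a probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


class OccupancyGrid:
    """Sparse voxel map: a voxel without an entry was never observed (unknown)."""

    def __init__(self):
        self.log_odds = {}

    def update(self, voxel, hit):
        """Fuse one observation (hit=True: ray endpoint, False: ray passed through)."""
        value = self.log_odds.get(voxel, 0.0) + logit(P_HIT if hit else P_MISS)
        # Clamping bounds the confidence so the map can still adapt to changes.
        value = max(logit(CLAMP_MIN), min(logit(CLAMP_MAX), value))
        self.log_odds[voxel] = value

    def state(self, voxel, threshold=0.5):
        """Classify a voxel as 'occupied', 'free', or 'unknown'."""
        if voxel not in self.log_odds:
            return "unknown"
        occupied = probability(self.log_odds[voxel]) > threshold
        return "occupied" if occupied else "free"


if __name__ == "__main__":
    grid = OccupancyGrid()
    grid.update((1, 2, 3), hit=True)
    grid.update((0, 0, 0), hit=False)
    for voxel in [(1, 2, 3), (0, 0, 0), (9, 9, 9)]:
        print(voxel, grid.state(voxel))
```

Because the estimate is clamped, a bounded number of contradicting observations is enough to flip a voxel's state. In an octree, clamped values also make it possible to merge children that share the same value, which is one way to keep a tree compact.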

2012

-

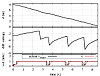

Efficient footstep planning for humanoid navigation through cluttered

environments is still a challenging problem. Many obstacles create local

minima in the search space, forcing heuristic planners such as A* to

expand large areas. The goal of this work is to efficiently

compute long, feasible footstep paths. For navigation, finding

the optimal path initially is often not needed as it can be improved while

walking. Thus, we propose

anytime search-based planning using the anytime repairing A* (ARA*) and

randomized A* (R*) planners. This allows us to obtain efficient paths with

provable suboptimality within short planning times. In contrast to

completely randomized methods such as rapidly-exploring random

trees (RRTs), these planners create

paths that are goal-directed and guaranteed to be no more than a certain

factor longer than the optimal solution. We thoroughly evaluated the planners in various

scenarios using different heuristics. ARA* with the 2D

Dijkstra heuristic yields fast and efficient solutions but its potential

inadmissibility results in non-optimal paths for some scenarios. R*, on the other hand,

borrows ideas from RRTs, yields fast solutions, and is less dependent

on a well-designed heuristic function. This allows it to avoid local

minima and reduces the number of expanded states.

@INPROCEEDINGS{hornung12humanoids,

author = {Armin Hornung and Andrew Dornbush and Maxim Likhachev and Maren

Bennewitz},

title = {Anytime Search-Based Footstep Planning with Suboptimality Bounds},

booktitle = {Proc.~of the IEEE-RAS International Conference on Humanoid

Robots (HUMANOIDS)},

year = 2012,

month = {November},

address = {Osaka, Japan}

}
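The notion of a suboptimality bound that tightens over time can be illustrated with a simplified stand-in for ARA*: weighted A* is rerun from scratch with a decreasing heuristic inflation factor. ARA* itself reuses search effort between iterations, which this sketch does not attempt. The 2D grid world, the Manhattan heuristic, and the function names are assumptions for illustration only, not the footstep planner from the paper.

```python
import heapq


def weighted_astar(grid, start, goal, eps):
    """Weighted A* on a 4-connected grid; returns the path cost or None.

    Each cell is expanded at most once. With a consistent heuristic, the
    returned cost is at most eps times the optimal cost.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: consistent for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g = {start: 0}
    open_list = [(eps * h(start), start)]
    closed = set()
    while open_list:
        _, cell = heapq.heappop(open_list)
        if cell == goal:
            return g[cell]
        if cell in closed:
            continue  # stale queue entry
        closed.add(cell)
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == 1 or nxt in closed:
                continue  # obstacle or already expanded
            new_g = g[cell] + 1
            if new_g < g.get(nxt, float("inf")):
                g[nxt] = new_g
                heapq.heappush(open_list, (new_g + eps * h(nxt), nxt))
    return None


def anytime_plan(grid, start, goal, eps_schedule=(3.0, 2.0, 1.0)):
    """Yield (eps, cost) pairs, tightening the suboptimality bound each round."""
    for eps in eps_schedule:
        yield eps, weighted_astar(grid, start, goal, eps)


if __name__ == "__main__":
    # 0 = free cell, 1 = obstacle.
    world = [
        [0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 1, 0],
        [0, 1, 0, 0, 0],
    ]
    for eps, cost in anytime_plan(world, (0, 0), (3, 4)):
        print(f"eps={eps}: path cost {cost}")
```

A robot could start walking along the first solution and switch to a better one as soon as a later iteration finishes, which matches the observation that the optimal path is often not needed initially.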

-

In this paper, we present an integrated approach for robot

localization, obstacle mapping, and path planning in 3D environments

based on data of an onboard consumer-level depth camera. We rely on

state-of-the-art techniques for environment modeling and

localization, which we extend for depth camera data. We

thoroughly evaluated our system with a Nao humanoid equipped with

an Asus Xtion Pro Live depth camera on top of the humanoid's head and present navigation

experiments in a multi-level environment containing static and

non-static obstacles. Our approach performs in real-time, maintains

a 3D environment representation, and estimates the robot's pose in 6D.

As our results demonstrate, the depth camera is well-suited

for robust localization and reliable obstacle avoidance in

complex indoor environments.

@INPROCEEDINGS{maier12humanoids,

author = {Daniel Maier and Armin Hornung and Maren Bennewitz},

title = {Real-Time Navigation in {3D} Environments Based on Depth Camera Data},

booktitle = {Proc.~of the IEEE-RAS International Conference on Humanoid

Robots (HUMANOIDS)},

year = 2012,

month = {November},

address = {Osaka, Japan}

}

-

To fulfill high-level tasks, humanoid service robots must

be able to autonomously and robustly navigate in man-made

environments. These environments can be arbitrarily

complex, containing multiple levels and various types of

stairs or ramps connecting them. We previously presented

techniques for autonomously climbing spiral staircases with

humanoid robots. In this work, we extend this research

direction by walking down ramps.

On a Nao humanoid, we apply kinesthetic teaching to learn

single stepping motions for the ramp. As we show in the

experiments, by using the learned motions and integrating

monocular vision and inertial data, the Nao is able to

autonomously walk down a 2.10 m long ramp at an inclination

of 20 degrees. The accompanying video shows the complete

process of locating the beginning of the ramp using visual

observations, walking down with regular corrections based

on the inertial data, and finally determining the end of

the ramp by detecting the ending edge before exiting the

ramp.

@INPROCEEDINGS{lutz12iros,

author = {Christian Lutz and Felix Atmanspacher and Armin Hornung

and Maren Bennewitz},

title = {NAO Walking Down a Ramp Autonomously},

booktitle = {Video Proc.~of the IEEE/RSJ International Conference on

Intelligent Robots and Systems (IROS)},

year = 2012,

month = {October},

address = {Vilamoura, Portugal}

}

-

In order to successfully climb challenging staircases that

consist of many steps and contain difficult parts, humanoid

robots need to accurately determine their pose. In this

paper, we present an approach that fuses the robot's

observations from a 2D laser scanner, a monocular camera,

an inertial measurement unit, and joint encoders in order

to localize the robot within a given 3D model of the

environment. We develop an extension to standard Monte

Carlo localization (MCL) that draws particles from an

improved proposal distribution to obtain highly accurate

pose estimates. Furthermore, we introduce a new observation

model based on chamfer matching between edges in camera

images and the environment model. We thoroughly evaluate

our localization approach and compare it to previous

techniques in real-world experiments with a Nao humanoid.

The results show that our approach significantly improves

the localization accuracy and leads to a considerably more

robust robot behavior. Our improved proposal in combination

with chamfer matching can be generally applied to improve a

range-based pose estimate by a consistent matching of lines

obtained from vision.

@INPROCEEDINGS{osswald12iros,

author = {Stefan O{\ss}wald and Armin Hornung and Maren Bennewitz},

title = {Improved Proposals for Highly Accurate Localization Using Range

and Vision Data},

booktitle = {Proc.~of the IEEE/RSJ International Conference on

Intelligent Robots and Systems (IROS)},

year = 2012,

month = {October},

address = {Vilamoura, Portugal}

}

-

In this paper, we consider the problem of efficient path planning

for humanoid robots by combining grid-based 2D planning with footstep

planning. In this way, we exploit the advantages of both frameworks,

namely fast planning on grids and the ability to find solutions in

situations where grid-based planning fails. Our method computes a

global solution by adaptively switching between fast grid-based

planning in open spaces and footstep planning in the vicinity of

obstacles. To decide which planning framework to use, our approach

classifies the environment into regions of different complexity with

respect to the traversability. Experiments carried out in a

simulated office environment and with a Nao humanoid show that (i)

our approach significantly reduces the planning time compared to

pure footstep planning and (ii) the resulting plans are almost as

good as globally computed optimal footstep paths.

@INPROCEEDINGS{hornung12icra,

author = {Armin Hornung and Maren Bennewitz},

title = {Adaptive Level-of-Detail Planning for Efficient Humanoid Navigation},

booktitle = {Proc.~of the IEEE International Conference on Robotics and

Automation (ICRA)},

year = {2012},

month = {May},

address = {St. Paul, MN, USA}

}

-

Collision-free navigation in cluttered environments is essential for

any mobile manipulation system. Traditional navigation systems have

relied on a 2D grid map projected from a 3D representation for

efficiency. This approach, however, prevents navigation close to

objects in situations where projected 3D configurations are in

collision within the 2D grid map even if actually no collision

occurs in the 3D environment. Accordingly, when using such a 2D

representation for planning paths of a mobile manipulation robot,

the number of planning problems which can be solved is limited and

suboptimal robot paths may result. We present a fast, integrated

approach to solve path planning in 3D using a combination of an

efficient octree-based representation of the 3D world and an anytime

search-based motion planner. Our approach utilizes a combination of

multi-layered 2D and 3D representations to improve planning speed,

allowing the generation of almost real-time plans with bounded

sub-optimality. We present extensive experimental results with the

two-armed mobile manipulation robot PR2 carrying large objects in a

highly cluttered environment. Using our approach, the robot is able

to efficiently plan and execute trajectories while transporting

objects, thereby often moving through demanding, narrow passageways.

@INPROCEEDINGS{hornung12icra_pr2,

author = {Armin Hornung and Mike Phillips and E. Gil Jones and

Maren Bennewitz and Maxim Likhachev and Sachin Chitta},

title = {Navigation in Three-Dimensional Cluttered Environments

for Mobile Manipulation},

booktitle = {Proc.~of the IEEE International Conference on Robotics and

Automation (ICRA)},

year = {2012},

month = {May},

address = {St. Paul, MN, USA}

}

2011

-

@INPROCEEDINGS{bennewitz11humws,

author = {Maren Bennewitz and Daniel Maier and Armin Hornung and

Cyrill Stachniss},

title = {Integrated Perception and Navigation in Complex Indoor Environments},

booktitle = {Proc. of the HUMANOIDS 2011 workshop on Humanoid service

robot navigation in crowded and dynamic environments},

year = 2011,

month = {October},

address = {Bled, Slovenia}

}

-

In this paper, we consider the problem of building 3D models of

complex staircases based on laser range data acquired with a

humanoid. These models have to be sufficiently accurate to enable the

robot to reliably climb up the staircase. We evaluate two

state-of-the-art approaches to plane segmentation for humanoid

navigation given 3D range data about the environment. The first

approach initially extracts line segments from neighboring 2D scan

lines, which are successively combined if they lie on the same

plane. The second approach estimates the main directions in the

environment by randomly sampling points and applying a clustering

technique afterwards to find planes orthogonal to the main directions. We

propose extensions for this basic approach to increase the robustness

in complex environments which may contain a large number of different

planes and clutter. In practical experiments, we thoroughly evaluate

all methods using data acquired with a laser-equipped Nao robot in a

multi-level environment. As the experimental results show, the

reconstructed 3D models can be used to autonomously climb up complex

staircases.

@INPROCEEDINGS{osswald11humanoids,

author = {Stefan O{\ss}wald and Jens-Steffen Gutmann and Armin Hornung

and Maren Bennewitz},

title = {From 3{D} Point Clouds to Climbing Stairs: A Comparison of Plane

Segmentation Approaches for Humanoids},

booktitle = {Proc.~of the IEEE-RAS International Conference

on Humanoid Robots (Humanoids)},

year = 2011,

month = {October},

address = {Bled, Slovenia}

}

-

Armin Hornung, E. Gil Jones, Sachin Chitta, Maren Bennewitz, Mike Phillips, and Maxim Likhachev.

Towards Navigation in Three-Dimensional Cluttered Environments.

In:

Abstract Proceedings of The PR2 Workshop: Results, Challenges and Lessons Learned in Advancing Robots with a Common Platform at IROS 2011.

@INPROCEEDINGS{hornung11irosws,

author = {A. Hornung and E. G. Jones and S. Chitta and M. Bennewitz and

M. Phillips and M. Likhachev},

title = {Towards Navigation in Three-Dimensional Cluttered Environments},

booktitle = {Proc. of the IROS 2011 PR2 Workshop: Results, Challenges and

Lessons Learned in Advancing Robots with a Common Platform},

year = 2011,

month = {September},

address = {San Francisco, CA, USA}

}

-

In this paper, we present an approach to enable a humanoid robot to

autonomously climb up spiral staircases. This task is substantially more challenging than climbing straight stairs since careful repositioning is needed. Our system globally

estimates the pose of the robot, which is subsequently refined by

integrating visual observations. In this way, the robot can

accurately determine its relative position with respect to the next

step. We use a 3D model of the environment to project edges

corresponding to stair contours into monocular camera images. By

detecting edges in the images and associating them with projected

model edges, the robot is able to accurately localize itself relative to

the stairs and to climb them. We present experiments carried out

with a Nao humanoid equipped with a 2D laser range finder for global

localization and a low-cost monocular camera for short-range

sensing. As we show in the experiments, the robot reliably climbs up the steps of

a spiral staircase.

@INPROCEEDINGS{osswald11iros,

author = {Stefan O{\ss}wald and Attila G{\"o}r{\"o}g

and Armin Hornung and Maren Bennewitz},

title = {Autonomous Climbing of Spiral Staircases with Humanoids},

booktitle = {Proc.~of the IEEE/RSJ International Conference on

Intelligent Robots and Systems (IROS)},

year = 2011,

month = {September},

address = {San Francisco, CA, USA}

}

-

Humanoid robots possess the capability of stepping over or onto

objects, which distinguishes them from wheeled robots. When planning paths for

humanoids, one therefore should consider an intelligent placement of footsteps

instead of choosing detours around obstacles. In this paper, we present an

approach to optimal footstep planning for humanoid robots. Since changes in the

environment may appear and a humanoid may deviate from its originally planned

path due to imprecise motion execution or slippage on the ground, the robot

might be forced to dynamically revise its plans. Thus, efficient methods for

planning and replanning are needed to quickly adapt the footstep paths to new

situations. We formulate the problem of footstep planning so that it can be

solved with the incremental heuristic search method D* Lite and present our

extensions, including continuous footstep locations and efficient collision

checking for footsteps. In experiments in simulation and with a real Nao

humanoid, we demonstrate the effectiveness of the footstep plans computed and

revised by our method. Additionally, we evaluate different footstep sets and

heuristics to identify the ones leading to the best performance in terms of path

quality and planning time. Our D* Lite algorithm for footstep planning is

available as an open-source implementation.

@INPROCEEDINGS{garimort11icra,

author = {Johannes Garimort and Armin Hornung and Maren Bennewitz},

title = {Humanoid Navigation with Dynamic Footstep Plans},

booktitle = {Proc.~of the IEEE International Conference on Robotics and

Automation (ICRA)},

year = 2011,

month = {May},

address = {Shanghai, China}

}

2010

-

In this paper, we present a localization method for humanoid robots

navigating in arbitrarily complex indoor environments using only onboard

sensing. Reliable and accurate

localization for humanoid robots operating in such environments is a

challenging task. First, humanoids typically execute motion

commands rather inaccurately and odometry can be estimated only very

roughly. Second, the observations of the small and lightweight

sensors of most humanoids are seriously affected by noise. Third,

since most humanoids walk with a swaying motion and can freely move in the environment,

e.g., they are not forced to walk on flat ground only, a 6D torso pose has

to be estimated. We apply Monte Carlo localization to globally

determine and track a humanoid's 6D pose in a 3D world model, which

may contain multiple levels connected by staircases. To

achieve a robust localization while walking and climbing stairs, we

integrate 2D laser range measurements as well as attitude data and

information from the joint encoders. We present simulated as well as

real-world experiments with our humanoid and thoroughly evaluate our

approach. As the experiments illustrate, the robot is able to

globally localize itself and accurately track its 6D pose over time.

@INPROCEEDINGS{hornung10iros,

author = {Armin Hornung and Kai M. Wurm and Maren Bennewitz},

title = {Humanoid Robot Localization in Complex Indoor Environments},

booktitle = {Proc.~of the IEEE/RSJ International Conference on Intelligent

Robots and Systems (IROS)},

year = 2010,

address = {Taipei, Taiwan},

month= {October}

}

-

Armin Hornung, Maren Bennewitz, and Wolfram Burgard.

Learning Efficient Vision-based Navigation.

In:

Abstract Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), 2010.

-

The majority of navigation algorithms for mobile robots assume that

the robots possess enough computational or memory resources to carry

out the necessary calculations. Especially small and lightweight

devices, however, are resource-constrained and have only restricted

capabilities. In this paper, we present a reinforcement learning

approach for mobile robots that considers the imposed constraints

on their sensing capabilities and computational resources,

so that they can reliably and efficiently fulfill their navigation tasks.

Our technique learns a policy that optimally trades off the speed of the robot and the

uncertainty in the observations imposed by its movements.

It furthermore enables the robot to learn an efficient

landmark selection strategy to compactly model the environment. We

describe extensive simulated and real-world experiments carried out

with both wheeled and humanoid robots which demonstrate

that our learned navigation policies significantly outperform

strategies using advanced and manually optimized heuristics.

@INPROCEEDINGS{hornung10erlars,

author = {Armin Hornung and Maren Bennewitz and Cyrill Stachniss and

Hauke Strasdat and Stefan O{\ss}wald and Wolfram Burgard},

title = {Learning Adaptive Navigation Strategies for Resource-constrained

Systems},

booktitle = {Proc.~of the 3rd Int.~Workshop on Evolutionary and Reinforcement

Learning for Autonomous Robot Systems (ERLARS)},

address = {Lisbon, Portugal},

year = 2010,

month = {August}

}

-

-

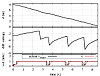

In this article, we present a novel approach to learning efficient

navigation policies for mobile robots that use visual features for

localization. As fast movements of a mobile robot typically introduce

inherent motion blur in the acquired images, the uncertainty of the

robot about its pose increases in such situations. As a result, it

cannot be ensured anymore that a navigation task can be executed

efficiently since the robot's pose estimate might not correspond to

its true location. We present a reinforcement learning

approach to determine a navigation policy to reach the destination

reliably and, at the same time, as fast as possible. Using our

technique, the robot learns to trade off velocity against localization

accuracy and implicitly takes the impact of motion blur on

observations into account. We furthermore developed a method to

compress the learned policy via a clustering approach. In this way,

the size of the policy representation is significantly reduced, which

is especially desirable in the context of memory-constrained systems.

Extensive simulated and real-world experiments carried out with two

different robots demonstrate that our learned policy significantly

outperforms policies using a constant velocity and more advanced

heuristics. We furthermore show that the policy is generally

applicable to different indoor and outdoor scenarios with varying

landmark densities as well as to navigation tasks of different complexity.

@ARTICLE{hornung10ar,

author = {Armin Hornung and Maren Bennewitz and Hauke Strasdat},

title = {Efficient Vision-based Navigation -- {L}earning about the Influence

of Motion Blur},

journal = {Autonomous Robots},

year = 2010,

volume = 29,

number = 2,

pages = {137--149},

doi = {10.1007/s10514-010-9190-3}

}

-

In this paper, we present an approach for modeling 3D environments

based on octrees using a probabilistic occupancy estimation. Our

technique is able to represent full 3D models including free and

unknown areas. It is available as an open-source library to

facilitate the development of 3D mapping systems. We

also provide a detailed review of existing approaches to 3D

modeling. Our approach was thoroughly evaluated using different

real-world and simulated datasets. The results demonstrate that our

approach is able to model the data probabilistically while, at the

same time, keeping the memory requirement at a minimum.

@inproceedings{wurm10icraws,

author = {K. M. Wurm and A. Hornung and

M. Bennewitz and C. Stachniss and W. Burgard},

title = {{OctoMap}: A Probabilistic, Flexible, and Compact {3D} Map

Representation for Robotic Systems},

booktitle = {Proc. of the ICRA 2010 Workshop on Best Practice in

3D Perception and Modeling for Mobile Manipulation},

year = 2010,

month = may,

address = {Anchorage, AK, USA},

url = {http://octomap.github.com},

note = {Software available at \url{http://octomap.github.com}}

}
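To illustrate the probabilistic occupancy estimation mentioned in the abstract above, here is a minimal sketch of a clamped log-odds update for a single map cell, a common way to maintain such estimates. It is not the OctoMap API: the class name `OccupancyCell` and all sensor-model values are assumptions chosen for illustration, and the octree structure itself is omitted.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def probability(log_odds):
    """Convert log-odds back to a probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


class OccupancyCell:
    """One map cell; a hypothetical stand-in for an octree leaf."""

    def __init__(self, p_hit=0.7, p_miss=0.4, p_min=0.12, p_max=0.97):
        # All four sensor-model values are illustrative assumptions.
        self.l_hit, self.l_miss = logit(p_hit), logit(p_miss)
        self.l_min, self.l_max = logit(p_min), logit(p_max)
        self.log_odds = 0.0  # a prior of 0.5 means "unknown"

    def update(self, hit):
        """Fuse one measurement: True for a beam endpoint, False for a beam passing through."""
        self.log_odds += self.l_hit if hit else self.l_miss
        # Clamping bounds the confidence so the cell can still change later.
        self.log_odds = max(self.l_min, min(self.l_max, self.log_odds))

    def occupancy(self):
        """Current occupancy probability of the cell."""
        return probability(self.log_odds)
```

With these assumed values, a single hit moves a fresh cell from 0.5 to 0.7, and repeated hits saturate at the upper clamp of 0.97 instead of approaching 1.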

-

Reliable and efficient navigation with a humanoid robot is a

difficult task. First, the motion commands are executed rather

inaccurately due to backlash in the joints or foot slippage. Second,

the observations are typically highly affected by noise due to the

shaking behavior of the robot. Thus, the localization performance

is typically reduced while the robot moves and the uncertainty about

its pose increases. As a result, the reliable and efficient execution of a

navigation task cannot be ensured anymore since the

robot's pose estimate might not correspond to the true location. In

this paper, we present a reinforcement learning approach to select

appropriate navigation actions for a humanoid robot equipped with a

camera for localization. The robot learns to reach the destination

reliably and as fast as possible, thereby choosing actions to

account for motion drift and trading off velocity in terms of fast

walking movements against accuracy in localization. We present

extensive simulated and practical experiments with a humanoid robot

and demonstrate that our learned policy significantly outperforms a

hand-optimized navigation strategy.

@INPROCEEDINGS{osswald10icra,

author = {Stefan O{\ss}wald and Armin Hornung and Maren Bennewitz},

title = {Learning Reliable and Efficient Navigation with a Humanoid},

booktitle = {Proc.~of the IEEE International Conference on Robotics and

Automation (ICRA)},

year = {2010},

address = {Anchorage, AK, USA},

month = {May}

}

2009

-

Cameras are popular sensors for robot navigation tasks such as

localization as they are inexpensive, lightweight, and provide rich

data. However, fast movements of a mobile robot typically reduce

the performance of vision-based localization systems due to motion

blur. In this paper, we present a reinforcement learning approach

to choose appropriate velocity profiles for vision-based navigation.

The learned policy minimizes the time to reach the destination and

implicitly takes the impact of motion blur on observations into

account. To reduce the size of the resulting policies, which is

desirable in the context of memory-constrained systems, we compress

the learned policy via a clustering approach. Extensive simulated

and real-world experiments demonstrate that our learned policy

significantly outperforms any policy that uses a constant velocity.

We furthermore show that our policy is applicable to different

environments. Additional experiments demonstrate that our

compressed policies do not result in a performance loss compared to

the originally learned policy.

@INPROCEEDINGS{hornung09iros,

author = {Armin Hornung and Hauke Strasdat and Maren Bennewitz and Wolfram

Burgard},

title = {Learning Efficient Policies for Vision-based Navigation},

booktitle = {Proc.~of the IEEE/RSJ International Conference on Intelligent

Robots and Systems (IROS)},

year = {2009},

address = {St. Louis, MO, USA},

month = {October}

}
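The speed versus localization trade-off described in the abstract above can be illustrated with a toy example. The sketch below is not the method from the paper: it uses plain tabular Q-learning on a hypothetical one-dimensional corridor in which moving fast covers two cells but raises an integer "uncertainty" level, and moving slowly covers one cell and lowers it. The corridor length, the uncertainty limit, and the rewards are all assumptions.

```python
import random

GOAL = 10          # corridor length in cells (hypothetical)
MAX_UNC = 2        # above this uncertainty level the robot counts as lost
SLOW, FAST = 0, 1


def step(state, action):
    """Return (next_state, reward, done, lost) for the toy corridor."""
    pos, unc = state
    if action == FAST:
        pos, unc = pos + 2, unc + 1          # quick, but observations blur
    else:
        pos, unc = pos + 1, max(0, unc - 1)  # slow, the robot re-localizes
    if unc > MAX_UNC:
        return (pos, unc), -20.0, True, True  # localization lost
    return (pos, unc), -1.0, pos >= GOAL, False


def q_learning(episodes=5000, seed=0):
    """Learn Q-values with random exploration (off-policy)."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        state, done = (0, 0), False
        while not done:
            action = rng.choice((SLOW, FAST))
            nxt, reward, done, _ = step(state, action)
            future = 0.0 if done else max(q.get((nxt, a), 0.0) for a in (SLOW, FAST))
            # Learning rate 1 and no discounting suffice in this deterministic toy world.
            q[(state, action)] = reward + future
            state = nxt
    return q


def greedy_rollout(q):
    """Follow the learned policy from the start; return (actions, lost)."""
    state, done, lost, actions = (0, 0), False, False, []
    while not done:
        action = max((SLOW, FAST), key=lambda a: q.get((state, a), 0.0))
        actions.append(action)
        state, _, done, lost = step(state, action)
    return actions, lost
```

In this toy setup, the shortest safe plan takes six steps (four fast, two slow), compared with ten steps when always moving slowly, so a learned policy has to interleave both speeds.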

-

Cameras are useful sensors for mobile robot localization because they are relatively cheap, compact, and lightweight. This makes them attractive for robots with payload limitations, such as humanoid robots or small unmanned aerial vehicles. However, fast movements typically impair vision-based localization due to motion blur.

In this thesis, we present a reinforcement learning approach that lets a robot learn a vision-based navigation policy. The learned policy minimizes the time to reach the destination and implicitly takes into account the impact of motion blur on landmark observations and thus on localization. Extensive simulated and real-world experiments show that our learned policy significantly outperforms any constant-velocity policy and is generally applicable to different environments. We experimentally determined the most relevant state features for the learning task.

Additionally, we present a method for compressing the learned policy with a clustering approach. While the size of the policy representation is drastically reduced, our experiments show that there is no loss of performance. This is especially valuable for memory-constrained systems.

@MASTERSTHESIS{hornung09diplom,

author = {Armin Hornung},

title = {Learning Policies for Reliable Mobile Robot Localization},

school = {Albert-Ludwigs-Universit{\"a}t Freiburg},

year = {2009},

type = {Diplomarbeit},

address = {Freiburg, Germany},

month = {January}

}

2008

-

Our table soccer robot can already challenge even professional human players. Next, the robot should play games by using human-like skills. As a foundation of this research, our table soccer game recorder can save and replay games played by humans. This video shows the construction and functionality of the recording system. We use three types of sensors mounted on a regular game table. The movement of a game rod is measured by an optical distance sensor. Its turning is observed by a magnetic rotary encoder. Two laser measurement systems are synchronized to determine the position of the ball. The raw sensor data is smoothed using a multi-model Kalman filter. We developed several software modules for the system. The modules provide a basis for future research.

@INPROCEEDINGS{zhang08iros,

author = {Zhang, Dapeng and Hornung, Armin},

title = {A Table Soccer Game Recorder},

booktitle = {Proc.~of the IEEE/RSJ International Conference on Intelligent

Robots and Systems (IROS)},

year = {2008},

address = {Nice, France},

month = {September}

}

-

In table soccer, humans cannot always thoroughly observe fast actions like rod spins and kicks. However, this is necessary in order to detect rule violations, for example in tournament play. We describe an automatic system using sensors on a regular soccer table to detect rule violations in real time. Naive Bayes is used for kick classification; the parameters are trained using supervised learning. In the on-line experiments, rule violations were detected at a higher rate than by the human players. The implementation proved its usefulness by being used by humans in real games and sets a basis for future research using probability models in table soccer.

@INPROCEEDINGS{hornung08ki,

author = {Hornung, Armin and Zhang, Dapeng},

title = {On-Line Detection of Rule Violations in Table Soccer},

booktitle = {KI '08: Proceedings of the 31st annual German conference on

Advances in Artificial Intelligence},

year = {2008},

pages = {217--224},

address = {Berlin, Heidelberg},

month = {March},

publisher = {Springer-Verlag},

doi = {10.1007/978-3-540-85845-4_27},

isbn = {978-3-540-85844-7},

location = {Kaiserslautern, Germany}

}

-

Humans improve their sport skills by eliminating one recognizable

weakness at a time. Inspired by this observation, we

define a learning paradigm in which different learners can

be plugged together. An extra attention model is in charge

of iterating over them and choosing which one to improve

next. The paradigm is named Switching Attention Learning

(SAL). The essential idea is that improving one model in the

system generates more "improvement space" for the others.

Using SAL, an application for tracking the game ball in a

table soccer game-recorder is implemented. We developed

several models and learners which work together in the SAL

framework, producing satisfying results in the experiments.

The related problems, advantages, and perspective of the

switching attention learning are discussed in this paper.

@INPROCEEDINGS{zhang08ciras,

author = {Zhang, Dapeng and Nebel, Bernhard and Hornung, Armin},

title = {Switching Attention Learning -- A Paradigm for Introspection and

Incremental Learning},

booktitle = {Proc.~of the 5th International Conference on Computational

Intelligence, Robotics and Autonomous Systems (CIRAS)},

year = {2008},

address = {Linz, Austria},

month = {June}

}

2007

-

Armin Hornung.

Detecting Violations in Table Soccer Games Using Naive Bayes Classifiers.

Student project, Albert-Ludwigs-Universität, Freiburg, Germany, 2007.

In table soccer, humans cannot always accurately observe fast actions like rod spins and kicks. However, this is necessary in order to detect rule violations, for example in tournament play. This project describes an automatic referee using sensors on a regular soccer table to detect rule violations. The gap between noisy sensor data and the high-level concept of a kick is bridged by using naive Bayes classifiers for kick detection. The inputs to the classifiers are the coordinates of the ball relative to the kicking figure, modelled as Gaussian distributions. The classifier is trained offline using supervised learning. In the experiments, all rule violations were detected online. The implementation proved its usefulness by being used in regular games. Future work on segmenting table soccer games or sequence learning can benefit from the findings of this project by classifying game situations with the methods described here.
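The kick detection idea in the abstract above can be sketched as a Gaussian naive Bayes classifier over the ball position relative to the kicking figure. This is only an illustration under assumptions, not the project's code: the function names `fit` and `classify`, the two class labels, and the tiny training set are all hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical training data: (dx, dy) of the ball relative to the figure, plus a label.
TRAINING = [
    ((0.1, 0.0), "kick"), ((-0.1, 0.1), "kick"),
    ((0.0, -0.1), "kick"), ((0.2, 0.1), "kick"),
    ((3.0, 2.0), "no_kick"), ((2.5, -2.0), "no_kick"),
    ((-3.0, 1.5), "no_kick"), ((-2.8, -1.0), "no_kick"),
]


def fit(samples):
    """Estimate a log-prior and per-feature (mean, variance) for each class."""
    by_label = defaultdict(list)
    for features, label in samples:
        by_label[label].append(features)
    model = {}
    for label, rows in by_label.items():
        stats = []
        for column in zip(*rows):
            mean = sum(column) / len(column)
            # A small constant keeps the variance strictly positive.
            var = sum((x - mean) ** 2 for x in column) / len(column) + 1e-6
            stats.append((mean, var))
        model[label] = (math.log(len(rows) / len(samples)), stats)
    return model


def classify(model, features):
    """Return the label with the highest log-posterior for the given features."""
    def score(label):
        log_prior, stats = model[label]
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)
            for x, (mean, var) in zip(features, stats)
        )
    return max(model, key=score)
```

With the hypothetical data above, `classify(fit(TRAINING), (0.05, 0.0))` returns `"kick"`, because the ball lies close to the figure, while a distant ball position such as `(2.9, 1.8)` is labelled `"no_kick"`.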
